Foundations of Multi-LLM Agent Collaborative Intelligence

The original Multi-LLM Agent Collaboration framework, in development since 2022

The MACI framework—Multi-LLM Agent Collaborative Intelligence—represents the culmination of a multi-year research effort across nine foundational pillars:

- Pillar I: Consciousness Modeling – foundational theory for reflective and multi-perspective reasoning (2020–2022)

- Pillar II: Critical Reading Analysis (CRIT) – reasoning validation and argument structure analysis (2023)

- Pillar III: Collaborative Multi-LLM Reasoning (SocraSynth) – structured debate with dynamic modulation of linguistic behavior—including emphasis, tones, and language patterns—to mitigate hallucinations and reduce biases (2023)

- Pillar IV: Behavioral Emotion Analysis Modeling (BEAM) – modeling and modulating linguistic behaviors based on foundational emotions (2024)

- Pillar V: Entropy-Governed Multi-Agent Debate (EVINCE) – quantitative modulation of dialogue via information theory (2024)

- Pillar VI: Ethical Adjudication (DIKE–ERIS) – culturally aware decision-making through dual-agent governance (2024)

- Pillar VII: Adaptive Multi-Agent System for Mitigating LLM Planning Limitations – overcoming Gödelian self-validation barriers through iterative “think-validation” loops and addressing attention/context window narrowing (2024)

- Pillar VIII: Transactional Long-Lived Planning (SagaLLM) – persistent workflows with memory and rollback (2025)

- Pillar IX: Unified Cognitive Consciousness Theory (UCCT) – a theory that unifies System 1 and System 2 reasoning (2025)

These components collectively define a pathway toward reliable, trustworthy, and interpretable general intelligence through multi-agent LLM coordination.

Introduction

Building on foundational research from 2020 onward, the MACI framework—Multi-LLM Agent Collaborative Intelligence—offers a unified architecture for collaborative reasoning, ethical alignment, and persistent planning using large language models.

The following sections trace MACI’s evolution through nine major pillars, detailing the innovations that led from theoretical modeling to real-world orchestration.

2020–2022: Consciousness Modeling and Foundational Theory

MACI’s origins lie in the exploration of computational consciousness and multi-perspective reasoning, developed during the Stanford CS372 lecture series (2020–2022). These ideas culminated in the CoCoMo paper, which introduced a formal framework for modeling consciousness as emerging from automatic, reflective, pattern-matching processes, akin to an unconscious substrate. By asking what consciousness is and where it arises, CoCoMo articulated the transition between pattern recognition and conscious function, laying a theoretical foundation for AGI. This work established the epistemic framework for collaborative LLM behavior and seeded the architectural vision that became MACI.

2023: SocraSynth, CRIT, and the Birth of Multi-Agent Reasoning

Building on this foundation, 2023 saw the release of SocraSynth, a framework for orchestrating structured debates between LLMs. Rather than relying on a monolithic oracle, SocraSynth coordinates agents—each with a distinct stance or specialization—to conduct Socratic, adversarial-collaborative dialogue.

It operates in two phases:

- Generative Phase: LLM agents engage in structured discussion, critiquing and refining each other’s outputs.

- Evaluative Phase: The discussion is examined through scenario testing, counterfactual reasoning, and coherence validation.

This interaction rests on four design principles:

- Adversarial Multi-LLM Integration: Reduces hallucinations and encourages deep insight via dialectic tension.

- Conditional Statistics Framework: Assigns distinct analytic or ethical roles to agents for output diversity.

- Adversarial Linguistic Calibration: Modulates LLMs' linguistic behaviors along a spectrum—from contentious to conciliatory—to surface overlooked assumptions, uncover novel perspectives, and stimulate richer reasoning through stylistic contrast.

- Reflexive Evaluation: Applies internal consistency checks to ensure robustness before convergence.
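The generative phase and the contentious-to-conciliatory calibration described above can be sketched as a simple turn-taking loop. This is a minimal illustration, not SocraSynth's actual implementation: the `AgentFn` signature, the linear schedule from `start` to `end`, and the `stub_agent` stand-in for a real LLM call are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical agent signature: (topic, stance, contentiousness, transcript) -> reply.
AgentFn = Callable[[str, str, float, List[str]], str]


@dataclass
class Debate:
    topic: str
    transcript: List[str] = field(default_factory=list)


def run_debate(topic: str, agent_a: AgentFn, agent_b: AgentFn,
               rounds: int = 3, start: float = 0.9, end: float = 0.3) -> Debate:
    """Alternate two agents while relaxing contentiousness from `start` to `end`."""
    debate = Debate(topic)
    for r in range(rounds):
        # Assumed schedule: linear interpolation across rounds.
        frac = r / (rounds - 1) if rounds > 1 else 0.0
        level = start + (end - start) * frac
        for name, stance, agent in (("A", "for", agent_a), ("B", "against", agent_b)):
            reply = agent(topic, stance, level, debate.transcript)
            debate.transcript.append(f"[{name} @ {level:.2f}] {reply}")
    return debate


def stub_agent(topic: str, stance: str, level: float, transcript: List[str]) -> str:
    """Stand-in for an LLM call; a real agent would prompt a model here."""
    tone = "contentious" if level >= 0.5 else "conciliatory"
    return f"{tone} argument {stance} '{topic}' (turn {len(transcript) + 1})"


debate = run_debate("Should the policy be adopted?", stub_agent, stub_agent)
for line in debate.transcript:
    print(line)
```

In a real system the transcript would then be handed to the evaluative phase; here it is only printed.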

In parallel, we introduced CRIT—the Critical-Reading Inquisitive Template—to model systematic evaluation of arguments. CRIT enhances reasoning coherence by issuing structured prompts to LLMs, guiding them through:

- Identifying key claims and evidence

- Validating reason-conclusion relationships

- Identifying missing counterarguments

- Evaluating citations and source trustworthiness

- Producing an overall document quality score with justification

CRIT was designed to embody the Socratic method, operationalizing critical thinking through layered inquiry. It complements SocraSynth by offering fine-grained argument inspection—essential for rigorous multi-agent evaluation.
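To make the final step of the list above concrete, the sketch below aggregates per-reason judgments into a single document score. It assumes the earlier steps (claim extraction, reason validation, source rating) have already produced numeric values, for example from LLM prompts. The `Reason` fields, the averaging rule, the counterargument penalty, and the 1-10 scale are illustrative assumptions, not CRIT's published formula.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Reason:
    text: str
    validity: float     # how well the reason supports the conclusion, in [0, 1]
    credibility: float  # trust placed in the reason's source, in [0, 1]


def crit_score(reasons: List[Reason], missing_counterarguments: int = 0,
               penalty: float = 0.05) -> float:
    """Combine per-reason scores into a document score on an assumed 1-10 scale."""
    if not reasons:
        return 1.0  # no supporting reasons: lowest score
    # Each reason contributes validity weighted by source credibility.
    support = sum(r.validity * r.credibility for r in reasons) / len(reasons)
    # Hypothetical penalty for each counterargument the document fails to address.
    support = max(0.0, support - penalty * missing_counterarguments)
    return round(1 + 9 * support, 2)


reasons = [
    Reason("Cited trial shows a large effect", validity=0.9, credibility=0.8),
    Reason("Anecdotal report from a blog", validity=0.6, credibility=0.5),
]
score = crit_score(reasons, missing_counterarguments=1)
print(f"document score: {score}")
```

Weighting validity by credibility means a well-argued reason from an untrusted source counts for less than the same reason from a trusted one, which mirrors the citation check in the list above.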

2024: EVINCE – Quantitative Control of Multi-Agent Dialogue

While SocraSynth laid the foundation for structured multi-LLM dialogue, it relied on qualitative tuning of agent interaction—moderating debates between contentious and conciliatory tones based on human intuition or prompt engineering. In contrast, EVINCE—Entropy and Variation IN Conditional Exchanges—introduces a principled, quantitative framework rooted in information theory.

EVINCE modulates multi-agent discourse using metrics such as:

- Cross Entropy – to detect redundant or low-diversity responses

- Mutual Information – to measure inter-agent influence and convergence

- Divergence Scores – to maintain productive disagreement without incoherence

It enables LLMs to adopt non-default stances by manipulating conditional likelihoods, moving beyond maximum-likelihood generation to goal-specific exploration. Through dual-entropy optimization, EVINCE ensures idea diversity during early exploration and focused consensus during final synthesis. It also integrates CRIT-based validation modules to assess coherence, argument structure, and missing counterpoints.
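The three metrics listed above have standard information-theoretic definitions, which the following sketch computes for two agents' probability distributions over the same set of candidate answers. It is a minimal illustration under stated assumptions: Jensen-Shannon divergence is used as one possible "divergence score", and the `is_redundant` rule and its threshold are hypothetical, not EVINCE's actual control policy.

```python
import math
from typing import List, Sequence


def cross_entropy(p: Sequence[float], q: Sequence[float], eps: float = 1e-12) -> float:
    """H(p, q) in bits; eps guards against log(0)."""
    return -sum(pi * math.log2(max(qi, eps)) for pi, qi in zip(p, q) if pi > 0)


def entropy(p: Sequence[float]) -> float:
    return cross_entropy(p, p)


def js_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """Jensen-Shannon divergence in bits; symmetric and bounded by 1."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    kl_pm = cross_entropy(p, m) - entropy(p)
    kl_qm = cross_entropy(q, m) - entropy(q)
    return 0.5 * (kl_pm + kl_qm)


def mutual_information(joint: List[List[float]]) -> float:
    """I(A; B) in bits, where joint[i][j] = P(A = i, B = j)."""
    px = [sum(row) for row in joint]
    py = [sum(col) for col in zip(*joint)]
    return sum(
        pij * math.log2(pij / (px[i] * py[j]))
        for i, row in enumerate(joint)
        for j, pij in enumerate(row)
        if pij > 0
    )


def is_redundant(p: Sequence[float], q: Sequence[float], threshold: float = 0.05) -> bool:
    """Hypothetical rule: flag two agents as redundant when they barely diverge."""
    return js_divergence(p, q) < threshold


agent_a = [0.7, 0.2, 0.1]  # agent A's distribution over three candidate answers
agent_b = [0.1, 0.2, 0.7]  # agent B's distribution over the same answers
print(f"cross entropy H(A, B): {cross_entropy(agent_a, agent_b):.3f} bits")
print(f"JS divergence:         {js_divergence(agent_a, agent_b):.3f} bits")
print(f"redundant:             {is_redundant(agent_a, agent_b)}")
```

A moderator could track these values round by round, encouraging disagreement while divergence is low and steering toward synthesis once mutual information indicates the agents are converging.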

2024: BEAM – Behavioral Emotion Analysis Modeling

As SocraSynth matured, we encountered the challenge of managing emotional dynamics in LLM-driven debates, in particular how to model and regulate "contentiousness." To address this, we introduced Behavioral Emotion Analysis Modeling (BEAM), which maps linguistic behaviors onto basic emotional spectra such as "hate" and "love." BEAM allows for the modulation of emotional tone in text, enabling more effective control of argument style and intensity.

This framework is grounded in three core steps:

- Emotion Definition: Identifying and isolating basic emotions that can influence language generation and ethical outcomes.

- Quantification and Ethics Mapping: Training models on emotionally varied text samples to learn and modulate emotional expression within ethical boundaries.

- Testing and Adaptation: Deploying BEAM in real-world scenarios (e.g., multimedia generation) to evaluate emotional consistency, safety, and user reception.

BEAM integrates insights from cognitive psychology and Bayesian conditioning to demonstrate how in-context learning can shift the emotional valence of LLM outputs. It enables emotion-sensitive editing, such as transforming hate speech into neutral statements by modulating underlying affective cues. This capability paved the way for dynamic linguistic calibration in EVINCE and for the ethical adjudication safeguards in DIKE–ERIS.

2024: DIKE–ERIS – Ethical Adjudication via Deliberative Dual-Agent Design

While EVINCE governs epistemic diversity and convergence, DIKE–ERIS addresses the challenge of ethical alignment. Inspired by constitutional design, it implements a deliberative framework composed of two specialized agents:

- DIKE – Advocates for fairness, regulation, and normative consistency

- ERIS – Challenges assumptions, introduces cultural context, and promotes dissent

Rather than hardcoding moral rules or relying on static alignment scores, DIKE–ERIS ensures ethical soundness through internal deliberation. Outputs are reviewed from multiple perspectives and scored based on fairness, inclusiveness, and context sensitivity. The result is a dynamic ethical process—not a fixed standard—adaptable to domain, audience, and evolving cultural norms.

This adjudicative model extends MACI’s capacity from “what is true?” to “what is just?”, enabling AI agents to deliberate not only toward correctness, but toward socially responsible conclusions.

2025: SagaLLM – Persistent Memory and Transactional Planning

In 2025, MACI matured into an execution-ready platform, capable of sustaining long-term planning, persistent memory, and failure recovery. We introduced SagaLLM, a transactional orchestration system based on the Saga pattern from distributed databases.

SagaLLM equips multi-agent LLM systems with:

- Spatial-Temporal Checkpointing: For reversible state recovery and reliable execution.

- Inter-Agent Dependency Management: Tracks interlocked tasks and alerts agents to contradictions.

- Independent Critical Validation: Uses CRIT-like lightweight agents to verify decisions at key junctures.

These mechanisms collectively enable:

- Transactional Consistency with compensatory rollback logic

- Robust Validation against drift and incoherence

- Scalable Performance through modular planning

- Integrated Intelligence grounded in both language and system-level reliability
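The compensatory rollback mentioned above follows the general Saga pattern: each step is paired with an action that undoes it, and a failure triggers the undo actions of all completed steps in reverse order. The sketch below shows that generic pattern only; the `run_saga` helper, the step names, and the travel-booking scenario are hypothetical and do not reflect SagaLLM's actual interfaces.

```python
from typing import Callable, Dict, List, Tuple

# A step is (name, action, compensation); both callables mutate shared state.
Step = Tuple[str, Callable[[Dict], None], Callable[[Dict], None]]


def run_saga(steps: List[Step], state: Dict) -> Tuple[bool, List[str]]:
    """Run steps in order; on failure, undo completed steps in reverse order."""
    completed: List[Tuple[str, Callable[[Dict], None]]] = []
    log: List[str] = []
    for name, action, compensate in steps:
        try:
            action(state)
            completed.append((name, compensate))
            log.append(f"done:{name}")
        except Exception as exc:
            log.append(f"failed:{name}:{exc}")
            for done_name, undo in reversed(completed):
                undo(state)
                log.append(f"undone:{done_name}")
            return False, log
    return True, log


def book_flight(s: Dict) -> None:
    s["flight"] = "booked"


def cancel_flight(s: Dict) -> None:
    s.pop("flight", None)


def book_hotel(s: Dict) -> None:
    s["hotel"] = "booked"


def cancel_hotel(s: Dict) -> None:
    s.pop("hotel", None)


def validate_budget(s: Dict) -> None:
    # Stand-in for an independent validation agent rejecting the plan.
    raise ValueError("over budget")


def noop(s: Dict) -> None:
    pass


state: Dict = {}
ok, log = run_saga(
    [
        ("flight", book_flight, cancel_flight),
        ("hotel", book_hotel, cancel_hotel),
        ("budget", validate_budget, noop),
    ],
    state,
)
print(ok, state)
print(log)
```

Placing the validation step last means a rejected plan leaves no partial bookings behind, which is the transactional guarantee the list above refers to.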

SagaLLM closes the gap between AGI vision and real-world execution, allowing collaborative systems to function reliably over extended durations, across high-stakes domains like medicine, law, and infrastructure.

Beyond 2025: The UCCT Breakthrough and the Path Toward General Intelligence

Some AI leaders, including Yann LeCun, have argued that LLMs cannot achieve AGI due to their limitations in memory, planning, and grounding. MACI does not dispute these critiques; it answers them by orchestrating collaborative, role-specific, critically validated agents in a structured framework. The Unified Cognitive Consciousness Theory (UCCT), developed in Q2 and published on arXiv in June 2025, explains the foundational role of LLMs as pattern repositories, with conscious collaboration through semantic anchoring serving as the catalyst for progress toward AGI. On this view, the role of LLMs should not be diminished: capabilities such as memory, advanced sensing, and world grounding can be learned and built on the LLM foundation.

MACI is deeply interdisciplinary, drawing on:

- Cognitive Psychology: for modeling attention, memory, and self-awareness

- Philosophy: for truth, meaning, and epistemology

- Physics: for entropy-informed reasoning and system equilibrium

- Computer Science: for orchestration, retrieval, and planning

Modern LLMs already demonstrate multimodal capabilities. Under MACI, these capabilities are harmonized—enabling agents to integrate data from telescopes, microscopes, quantum sensors, and human conversations. Intelligence, in this setting, is no longer a property of an individual model—it emerges from dynamic orchestration, critical reflection, and epistemic balance.

MACI: Intelligence through Orchestration, Not Isolation

The MACI project continues not merely as a technical initiative, but as a philosophical and scientific journey. It redefines artificial general intelligence not as the product of one supermodel, but as the collective potential of systems that:

- Balance divergence and convergence

- Reason across modalities and cultures

- Reconcile disagreement through discourse

Please visit this site regularly for updates as we refine MACI, contribute to open research, and build toward a responsible and collaborative AGI future.

These components collectively define a pathway toward robust, trustworthy, and interpretable general intelligence through multi-agent LLM coordination.

Publications in 2025

8. *The Unified Cognitive Consciousness Theory for Language Models

The Unified Cognitive Consciousness Theory for Language Models: Anchoring Semantics, Thresholds of Activation, and Emergent Reasoning

Edward Y. Chang, June 2025.

7. *Persistent Memory for Long-Lived Workflow Planning

SagaLLM: Context Management, Validation, and Transaction Guarantees for Multi-Agent LLM Planning

Edward Y. Chang and Longling Geng, VLDB, September 2025.

6. *New Approach to AI Ethical Alignment

Emotion Regulation as Ethical Alignment: A Checks-and-Balances Framework

Edward Y. Chang, ICML, July 2025.

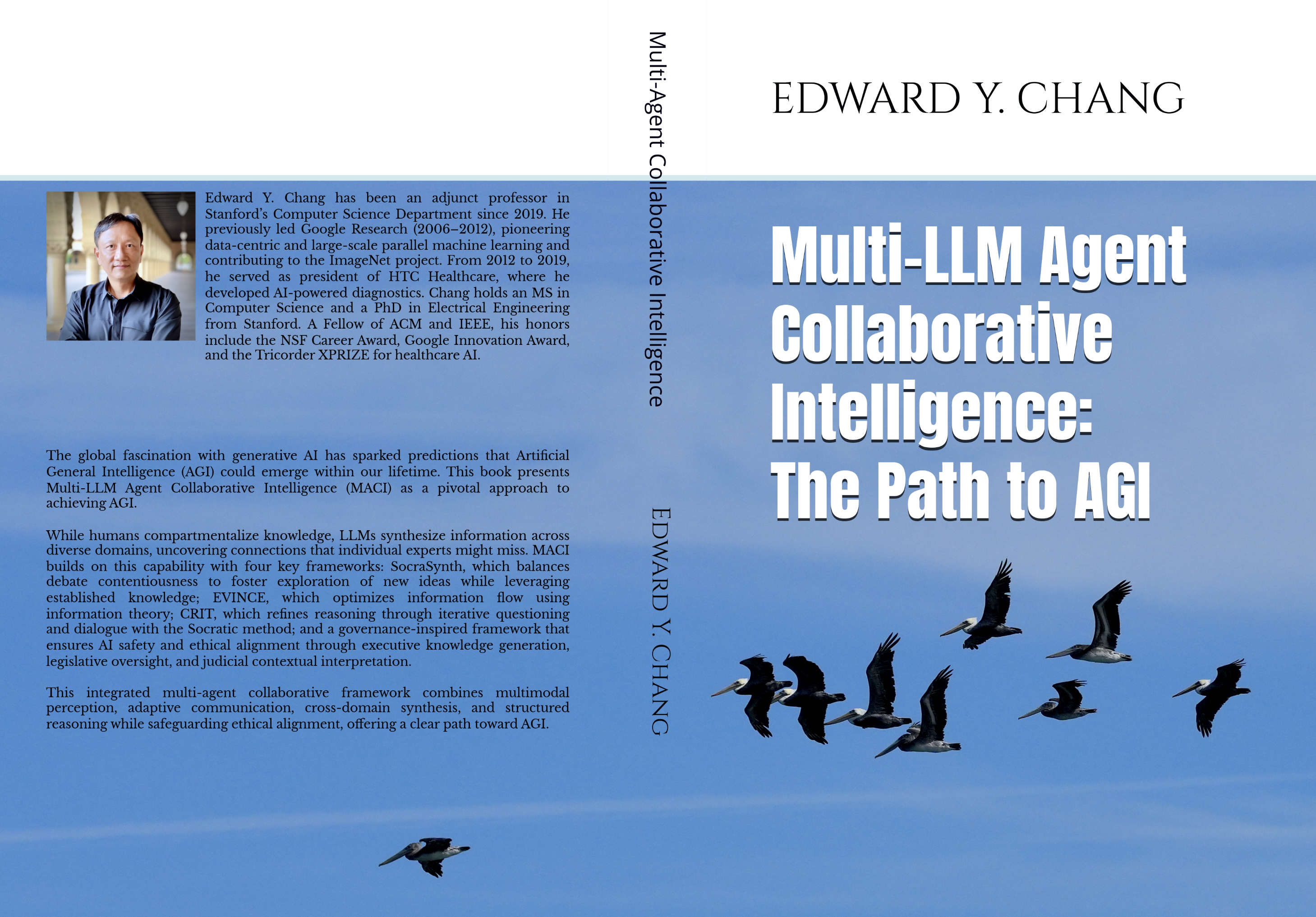

5. *Textbook, The Path to Artificial General Intelligence

Multi-LLM Agent Collaborative Intelligence: The Path to Artificial General Intelligence

Edward Y. Chang, October 2025, ACM Books.

4. *Establishing theoretical foundation for multi-LLM joint prediction

Multi-Agent Collaborative Intelligence: Dual-Dial Control for Reliable LLM Reasoning, 2024-2025 (under review).

3. Benchmark for Evaluating Multi-Agent Systems

REALM-Bench: A Real-World Planning Benchmark for LLMs and Multi-Agent Systems, ACM KDD, November 2025.

2. Multi-Agent System for Planning

Multi-Agent Collaborative Intelligence for Adaptive Reasoning and Temporal Planning

Edward Y. Chang, January/May, 2025 (under review)

1. Checks and Balances for AI Ethical Alignment, as RLHF Fails

A Three-Branch Checks-and-Balances Framework for Context-Aware Ethical Alignment of Large Language Models

Edward Y. Chang, NeurIPS AI Safety, December 2024.